Blueprint for LLM Integration in Enterprise Platforms

Enterprises want useful, safe, and fast LLM features, not demos. This blueprint translates strategy into production for media and content platform engineering, marketing, and brand teams. It assumes regulated data, tight SLAs, and multi-tenant constraints.

Architecture first: adopt a serverless architecture for elasticity at inference spikes, but anchor state in durable services. Pair API Gateways with auth, an event bus, and function compositions. Keep inference calls stateless; store prompts, templates, evaluation results, and user decisions in a versioned metadata store. This gives rollback, lineage, and reproducibility.
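As a rough illustration of the versioned metadata store, the sketch below keeps every prompt version in an append-only history and moves an "active" pointer for rollback. The class name `VersionedStore`, its methods, and the sample prompt key are assumptions made for this example; a production system would back this with a durable database rather than in-memory dictionaries.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class VersionedStore:
    """In-memory stand-in for a durable, versioned metadata store (hypothetical)."""

    _history: Dict[str, List[str]] = field(default_factory=dict)
    _active: Dict[str, int] = field(default_factory=dict)

    def put(self, key: str, value: str) -> int:
        """Append a new version and make it active; version numbers start at 1."""
        versions = self._history.setdefault(key, [])
        versions.append(value)
        self._active[key] = len(versions)
        return len(versions)

    def get(self, key: str, version: Optional[int] = None) -> str:
        """Return the active version, or a pinned one for reproducibility."""
        chosen = self._active[key] if version is None else version
        self._check(key, chosen)
        return self._history[key][chosen - 1]

    def rollback(self, key: str, version: int) -> None:
        """Point 'active' at an older version; history is never rewritten."""
        self._check(key, version)
        self._active[key] = version

    def _check(self, key: str, version: int) -> None:
        if not 1 <= version <= len(self._history[key]):
            raise ValueError(f"unknown version {version} for {key!r}")


# Illustrative usage: two prompt versions, then a rollback to the first.
store = VersionedStore()
store.put("headline-prompt", "Write a headline for: {brief}")
store.put("headline-prompt", "Write a punchy headline for: {brief}")
store.rollback("headline-prompt", 1)
```

Because older versions stay addressable by number, an evaluation result can record exactly which prompt version produced it, which is what makes lineage and reproducibility possible.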

Model portfolio and routing

Run a portfolio: Claude for long-context analysis and safe drafting, Gemini for multimodal understanding and tool use, and Grok for fast brainstorming or exploratory queries. Build a router with intent detection, latency budget, and policy tags. Example policies: "public copywriting," "internal analytics," "PII restricted." The router chooses a model, temperature, and context window based on policy and cost.
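One way to picture the router is as a lookup from policy tag and intent to a route, adjusted by the latency budget. The sketch below is a minimal version under stated assumptions: the intent labels, temperatures, context sizes, the `in-house-small` fallback, and the rule that "PII restricted" traffic never leaves a restricted route are all hypothetical choices for illustration, and the model strings are labels rather than real provider model IDs.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Route:
    model: str
    temperature: float
    max_context_tokens: int


# Hypothetical routing table keyed by (policy tag, detected intent).
ROUTES = {
    ("public copywriting", "brainstorm"): Route("grok", 0.9, 8_000),
    ("public copywriting", "draft"): Route("claude", 0.7, 32_000),
    ("internal analytics", "analyze"): Route("claude", 0.2, 100_000),
    ("internal analytics", "multimodal"): Route("gemini", 0.2, 32_000),
}

# Assumed conservative route for restricted or unrecognized requests.
RESTRICTED = Route("in-house-small", 0.0, 4_000)

TIGHT_BUDGET_MS = 1_000        # assumed threshold for "tight" latency budgets
TIGHT_BUDGET_CONTEXT = 8_000   # assumed context cap under a tight budget


def choose_route(policy: str, intent: str, latency_budget_ms: int) -> Route:
    """Pick model, temperature, and context size from policy, intent, and budget."""
    if policy == "PII restricted":
        return RESTRICTED
    route = ROUTES.get((policy, intent), RESTRICTED)
    if latency_budget_ms < TIGHT_BUDGET_MS:
        # Smaller context is a cheap lever for both latency and cost.
        capped = min(route.max_context_tokens, TIGHT_BUDGET_CONTEXT)
        return replace(route, max_context_tokens=capped)
    return route
```

Keeping the table as data rather than branching code means policy changes can go through the same review and versioning as prompt templates.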

- Cold start: pre-warm serverless containers for popular routes to keep p95 latency under 500 ms.

- Fallbacks: if a provider rate-limits, degrade gracefully to cached snippets or distilled summaries.

- A/B layers: ship shadow traffic to a challenger model and compare structured outcomes, not vibes.
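The fallback bullet above can be sketched as a loop over providers that ends in a cache lookup. This is a minimal illustration, assuming each provider is wrapped in a callable that raises a hypothetical `RateLimited` exception when throttled; the function names and the `"live"` / `"cached"` / `"unavailable"` labels are invented for the example.

```python
from typing import Callable, Dict, List, Tuple


class RateLimited(Exception):
    """Hypothetical error a provider wrapper raises when it is throttled."""


def generate_with_fallback(
    prompt: str,
    providers: List[Callable[[str], str]],
    cache: Dict[str, str],
) -> Tuple[str, str]:
    """Try each provider in order; degrade to a cached snippet if all throttle."""
    for call in providers:
        try:
            return call(prompt), "live"
        except RateLimited:
            continue  # move on to the next provider
    cached = cache.get(prompt)
    if cached is not None:
        return cached, "cached"
    return "", "unavailable"


# Stand-in providers for demonstration only.
def throttled(prompt: str) -> str:
    raise RateLimited("provider is rate-limiting")


def healthy(prompt: str) -> str:
    return f"draft for: {prompt}"
```

Returning the source label alongside the text lets the UI and the telemetry distinguish a live answer from a degraded one.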

Context, memory, and RAG done right

RAG succeeds when documents are clean and queries are shaped. Build an ingestion pipeline with language detection, boilerplate removal, and chunking tuned to your models' token windows. Store embeddings per tenant, with ACLs at document and paragraph levels. Use hybrid search: BM25 plus vector similarity to hold relevance under diverse queries.
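The text does not say how the BM25 and vector results should be combined. One common option is reciprocal rank fusion (RRF), which merges ranked lists without needing the two scoring scales to be comparable. The sketch below assumes each retriever has already returned an ordered list of document IDs that the caller is allowed to see; the constant `k = 60` is a conventional default, not a tuned value.

```python
from typing import Dict, List


def reciprocal_rank_fusion(rankings: List[List[str]], k: int = 60) -> List[str]:
    """Fuse several ranked ID lists; each hit contributes 1 / (k + rank)."""
    scores: Dict[str, float] = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    # Highest fused score first; ties broken by ID for stable output.
    return sorted(scores, key=lambda doc_id: (-scores[doc_id], doc_id))


# Illustrative inputs: one list from BM25, one from vector similarity.
bm25_hits = ["a", "b", "c"]
vector_hits = ["b", "c", "d"]
fused = reciprocal_rank_fusion([bm25_hits, vector_hits])
```

Documents that appear in both lists ("b" and "c" here) rise above documents found by only one retriever, which is the behavior hybrid search is meant to provide.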

For media workflows, enrich assets with captions, transcript segments, entities, and rights metadata. Gemini can generate shot lists; Claude can draft headlines; Grok can brainstorm variants. Persist user edits alongside AI outputs so they can inform future prompt revisions and help detect drift.

Determinism and guardrails

Wrap generation with deterministic validators. Use JSON Schemas, regex, and policy engines to enforce brand tone, prohibited claims, or jurisdictional rules. Before publishing SEO pages, run fact checks against internal knowledge and log citations. For ad ops, constrain outputs to approved vocab and character limits.

- Prompt templates live in Git with semantic diffs and approvals.

- Redact sensitive inputs at the edge; store only hashes and feature vectors.

- Monitor toxicity, PII leakage, and hallucination scores via offline evaluation sets.
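To make the validator and redaction ideas concrete, here is a small standard-library sketch. The prohibited-claim patterns, the 90-character limit, the email regex, and the `REDACTION_KEY` are all assumptions for illustration; real rules would come from legal and brand teams, and a real key would live in a secrets manager. A keyed hash (HMAC) is used instead of a bare hash because plain hashes of low-entropy values such as email addresses can be reversed by guessing.

```python
import hashlib
import hmac
import re
from typing import List

# Hypothetical rule set; real lists come from legal and brand policy.
PROHIBITED = [
    re.compile(pattern, re.IGNORECASE)
    for pattern in (r"\bguaranteed\b", r"\bcure[sd]?\b", r"\brisk[- ]free\b")
]
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
REDACTION_KEY = b"demo-key-rotate-me"  # placeholder, not a real secret


def validate_ad_copy(text: str, max_chars: int = 90) -> List[str]:
    """Return a list of rule violations; an empty list means the copy passes."""
    problems = []
    if len(text) > max_chars:
        problems.append(f"too long: {len(text)} > {max_chars} characters")
    for pattern in PROHIBITED:
        match = pattern.search(text)
        if match:
            problems.append(f"prohibited claim: {match.group(0).lower()}")
    return problems


def redact_emails(text: str) -> str:
    """Replace each email address with a short keyed-hash token."""

    def _token(match: "re.Match[str]") -> str:
        normalized = match.group(0).lower().encode("utf-8")
        digest = hmac.new(REDACTION_KEY, normalized, hashlib.sha256).hexdigest()
        return f"<email:{digest[:12]}>"

    return EMAIL.sub(_token, text)
```

Because the checks are deterministic, the same output always passes or fails the same way, which keeps publishing decisions auditable regardless of which model produced the text.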

Latency, cost, and reliability

Adopt a three-tier latency budget: sub-300 ms for autocomplete, sub-1 s for inline suggestions, and 2-5 s for heavy analysis. Stream tokens so responses feel instant. Cache at three layers: prompt+context fingerprint, retrieval results, and post-processed outputs. Track cost per request and per goal; abort long generations when ROI drops.
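A prompt+context fingerprint can be as simple as a hash over a canonical serialization of everything that influences the output. The sketch below is one possible shape; the field names (`template_id`, `template_version`, `context_ids`, `model`) are assumptions, and the in-memory dictionary stands in for whatever cache service is actually used.

```python
import hashlib
import json
from typing import Callable, Dict, List, Tuple


def fingerprint(
    template_id: str,
    template_version: int,
    variables: Dict[str, str],
    context_ids: List[str],
    model: str,
) -> str:
    """Hash every input that can change the output into a stable cache key."""
    payload = {
        "template_id": template_id,
        "template_version": template_version,
        "variables": variables,
        "context_ids": context_ids,  # order kept: context order can change output
        "model": model,
    }
    # sort_keys makes the serialization independent of dict insertion order.
    canonical = json.dumps(payload, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def cached_generate(
    cache: Dict[str, str], key: str, generate: Callable[[], str]
) -> Tuple[str, bool]:
    """Return (text, was_cache_hit); call the model only on a miss."""
    if key in cache:
        return cache[key], True
    text = generate()
    cache[key] = text
    return text, False


# Illustrative usage with stand-in generators instead of a real model call.
cache: Dict[str, str] = {}
key = fingerprint("headline", 3, {"brand": "Acme", "tone": "warm"}, ["doc-1", "doc-2"], "model-a")
first = cached_generate(cache, key, lambda: "Warm headline")
second = cached_generate(cache, key, lambda: "should not run")
```

Including the template version and model label in the key means a prompt or model change invalidates stale entries automatically rather than relying on manual cache flushes.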

Reliability means backpressure and idempotency. Use request IDs, idempotent handlers on top of at-least-once delivery (which gives effectively exactly-once results), and replayable events. When providers wobble, switch to a distilled small model for extractive tasks to keep workflows unblocked.
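The role of request IDs is easiest to see in a handler that remembers what it has already processed. In this minimal sketch the class name `IdempotentProcessor` is hypothetical and results are held in memory; in practice the result table would live in a durable store shared by all function instances.

```python
from typing import Callable, Dict, List


class IdempotentProcessor:
    """Run a handler at most once per request ID; replays return the stored result."""

    def __init__(self, handler: Callable[[str], str]) -> None:
        self._handler = handler
        self._results: Dict[str, str] = {}

    def process(self, request_id: str, payload: str) -> str:
        if request_id in self._results:
            return self._results[request_id]  # duplicate delivery or replay
        result = self._handler(payload)
        self._results[request_id] = result
        return result


# Demonstration: the same event is delivered twice but handled once.
calls: List[str] = []


def summarize(payload: str) -> str:
    calls.append(payload)
    return f"done:{payload}"


processor = IdempotentProcessor(summarize)
first = processor.process("req-1", "campaign-report")
replay = processor.process("req-1", "campaign-report")
```

With this in place, replaying an event stream after an outage is safe: already-completed requests return their recorded results instead of triggering a second, billable generation.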

Security, compliance, and tenancy

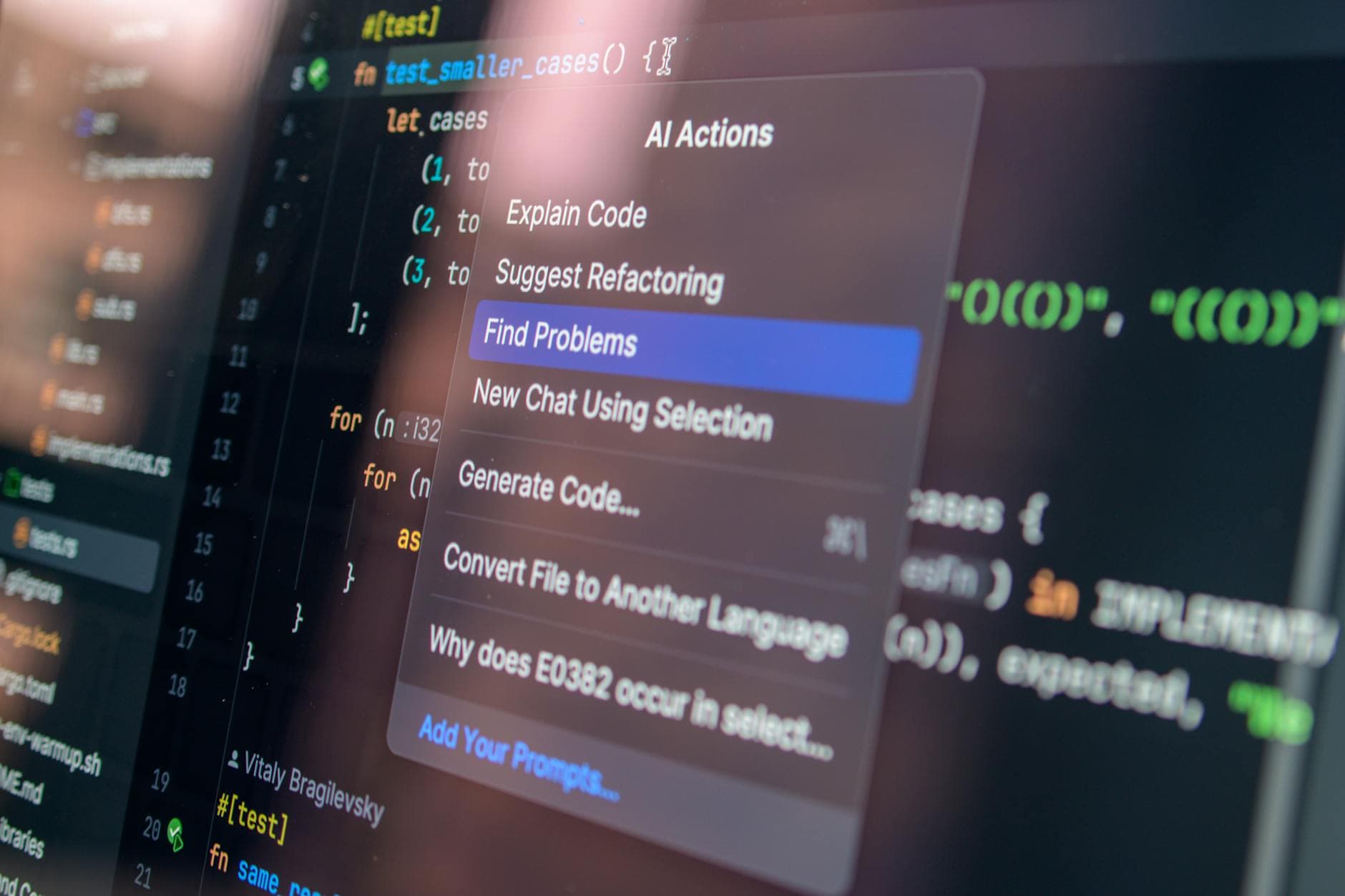

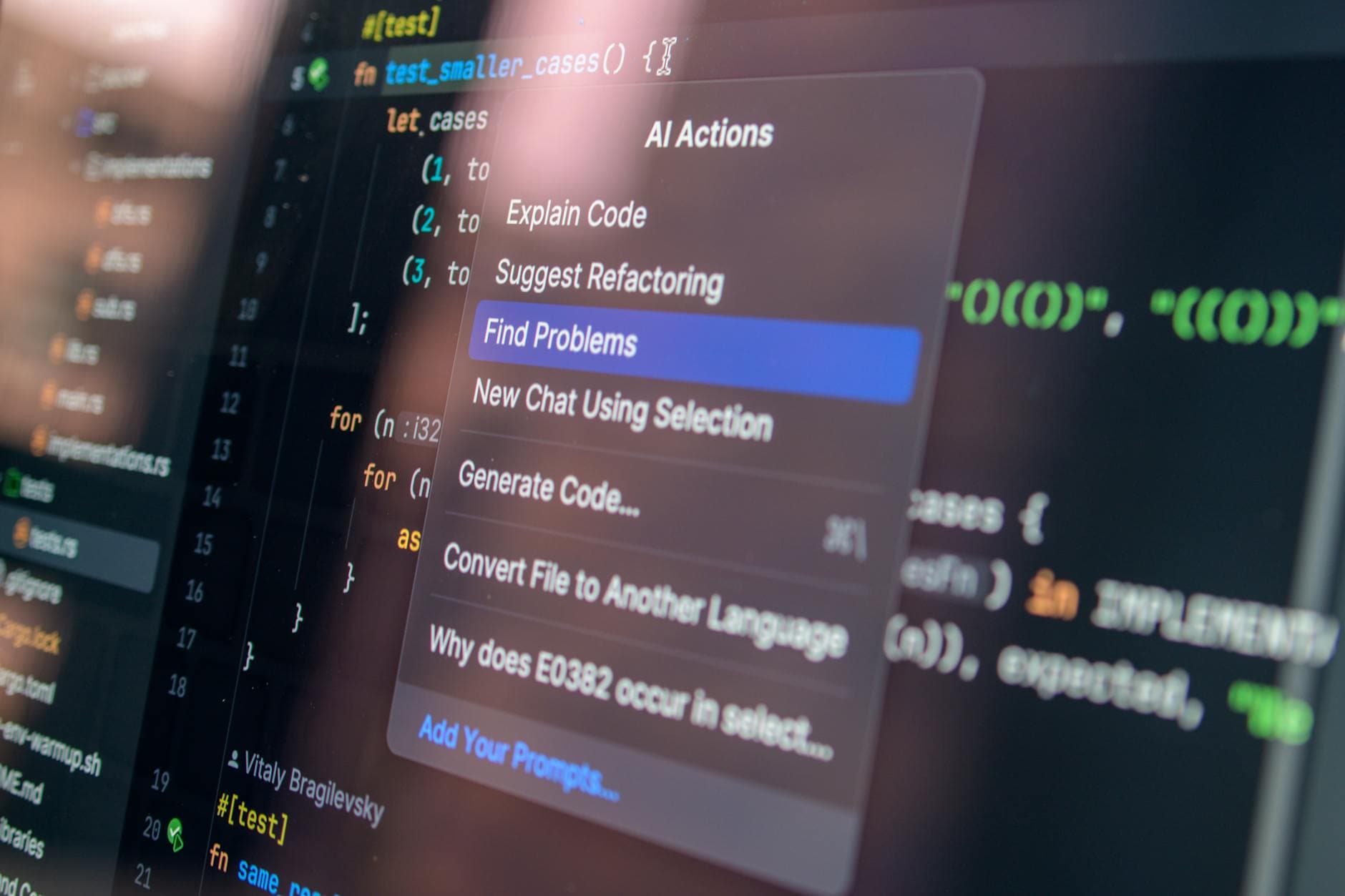

Implement fine-grained roles for editors, marketers, and analysts. Segregate tenants in embedding stores and logs. Offer regional processing options. For audits, keep an immutable trail of inputs, prompts, model versions, and human approvals. Code review and technical audit services should verify prompt libraries, privilege boundaries, and third-party dependencies every quarter.
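One way to approximate an immutable audit trail is a hash chain, where each entry commits to the one before it so that later edits become detectable. The sketch below is illustrative only: the record fields and function names are assumptions, and a hash chain provides tamper evidence rather than true immutability, so it would normally be paired with write-once storage.

```python
import hashlib
import json
from typing import Dict, List

GENESIS = "0" * 64  # placeholder hash that precedes the first entry


def _digest(prev_hash: str, record: Dict[str, str]) -> str:
    body = json.dumps(record, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256((prev_hash + body).encode("utf-8")).hexdigest()


def append_entry(log: List[dict], record: Dict[str, str]) -> dict:
    """Append a record whose hash covers both its content and the previous hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    entry = {"record": record, "prev_hash": prev_hash, "hash": _digest(prev_hash, record)}
    log.append(entry)
    return entry


def verify(log: List[dict]) -> bool:
    """Recompute the chain; any edited or reordered entry breaks it."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev_hash"] != prev_hash:
            return False
        if entry["hash"] != _digest(prev_hash, entry["record"]):
            return False
        prev_hash = entry["hash"]
    return True


# Demonstration with hypothetical field values.
log: List[dict] = []
append_entry(log, {"prompt_id": "headline-v3", "model_version": "model-a", "approved_by": "editor-17"})
append_entry(log, {"prompt_id": "headline-v4", "model_version": "model-a", "approved_by": "editor-22"})
intact = verify(log)
log[0]["record"]["approved_by"] = "someone-else"  # simulate tampering
still_valid = verify(log)
```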

Evaluation beyond BLEU

Create gold sets per use case: editorial headlines, support answers, policy compliance. Score with task-specific rubrics and human adjudication. Measure groundedness, citation match, and brand tone. Track regressions across model and prompt changes before rollout.
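Regression tracking can be reduced to a simple gate: compare the candidate's gold-set scores against the current baseline and block rollout if any metric drops by more than a tolerance. The metric names below come from the paragraph above; the scores and the 0.02 tolerance are made-up values for illustration.

```python
from typing import Dict


def find_regressions(
    baseline: Dict[str, float],
    candidate: Dict[str, float],
    tolerance: float = 0.02,
) -> Dict[str, float]:
    """Return {metric: delta} for metrics that dropped by more than the tolerance.

    A metric missing from the candidate is treated as a score of 0.0.
    """
    regressions = {}
    for metric, base_score in baseline.items():
        new_score = candidate.get(metric, 0.0)
        if new_score < base_score - tolerance:
            regressions[metric] = round(new_score - base_score, 4)
    return regressions


# Hypothetical gold-set averages for the current and challenger configurations.
baseline_scores = {"groundedness": 0.90, "citation_match": 0.80, "brand_tone": 0.75}
candidate_scores = {"groundedness": 0.85, "citation_match": 0.79, "brand_tone": 0.80}

regressions = find_regressions(baseline_scores, candidate_scores)
can_roll_out = not regressions
```

Here the candidate improves brand tone but loses too much groundedness, so the gate would hold the rollout even though the average across metrics barely moves.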

Use-case patterns that ship

Content ideation: ingest briefs, brand voice, and prior winners; route to Grok for variants, then Claude for long-form drafts with citations. SEO at scale: Gemini extracts entities, generates outlines, and fills data tables via tools. Rights and risk: automated rights checks plus claims validation before distribution.

- Moderation: multimodal Gemini flags risky frames; policy engine blocks publishing.

- Localization: dual-pass approach: translate with a deterministic glossary, then tone-tune with constrained prompts.

- Insights: summarize campaign lift with structured JSON for BI tools.
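For the localization pattern in the list above, a deterministic glossary check can run between the translation pass and the tone-tuning pass. The sketch below is a simplified illustration: the glossary entries and sample sentences are invented, and plain case-insensitive substring matching is an assumption that ignores inflection and word boundaries, which a real implementation would need to handle per language.

```python
from typing import Dict, List


def glossary_violations(
    source: str, translation: str, glossary: Dict[str, str]
) -> List[str]:
    """List source terms whose required target rendering is missing.

    Only terms that actually occur in the source text are checked.
    """
    source_lower = source.lower()
    translation_lower = translation.lower()
    missing = []
    for source_term, required_target in glossary.items():
        if source_term.lower() not in source_lower:
            continue  # term not used in this text
        if required_target.lower() not in translation_lower:
            missing.append(source_term)
    return missing


# Hypothetical English-to-German brand glossary and a draft translation.
glossary = {"subscription": "Abonnement", "free trial": "kostenlose Testphase"}
source_text = "Start your free trial of the subscription."
draft = "Starten Sie Ihre kostenlose Testphase des Abos."

violations = glossary_violations(source_text, draft, glossary)
```

A non-empty result would send the draft back for correction before any tone-tuning prompt sees it, so stylistic rewrites never paper over a terminology error.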

Team and process

Cross-functional squads own features end to end. Product defines outcome metrics; engineering codifies policy; legal sets guardrails. Bring in partners like slashdev.io when you need specialized media and content platform engineering or a staffed guild for serverless architecture, data pipelines, and rapid integrations.

From pilot to platform

Start with a narrow, high-value loop: wire evaluators and telemetry first, then add models. Freeze interfaces early: prompt contracts, schema outputs, and tool protocols. As use grows, shard tenants, compress embeddings, and negotiate committed use with providers. Your LLM platform should feel invisible: dependable, audited, and quietly accelerating every campaign and brand touchpoint. Own the layer, not the model; ship value with measurable, governable AI capabilities across your enterprise.