Blueprint for Integrating LLMs into Enterprise Applications

Large language models are no longer R&D curiosities; they are new runtime surfaces. This blueprint distills proven patterns for embedding Claude, Gemini, and Grok into mission-critical systems in media and content platform engineering, marketing stacks, and B2B SaaS, all with a serverless architecture mindset and enterprise guardrails.

1) Frame high-value use cases and hard KPIs

Pick narrow tasks with measurable outcomes. Tie LLM outputs to concrete business metrics rather than fuzzy "insights."

- Editorial brief generation: time-to-first-draft ↓ 60%, SEO click-through ↑ 10%.

- Customer support summarization: handle time ↓ 25%, CSAT ↑ 6 points.

- Ad-ops classification: policy violations flagged with 95% precision.
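
A KPI like the 95% precision target is only useful if it is computed the same way on every review batch. The sketch below shows one way to do that; the `Review` record and the idea of comparing model flags against a human reviewer's verdict are assumptions for illustration, not something the blueprint prescribes.

```python
from dataclasses import dataclass

PRECISION_TARGET = 0.95  # the ad-ops KPI from the list above


@dataclass
class Review:
    """One ad decision (hypothetical record shape)."""
    flagged: bool    # the model flagged the ad as a policy violation
    violation: bool  # a human reviewer confirmed a violation


def precision(reviews):
    """Share of flagged ads that were real violations; None if nothing was flagged."""
    flagged = [r for r in reviews if r.flagged]
    if not flagged:
        return None
    return sum(r.violation for r in flagged) / len(flagged)


def meets_target(reviews, target=PRECISION_TARGET):
    """True only when precision is defined and at or above the target."""
    value = precision(reviews)
    return value is not None and value >= target
```

Returning `None` when nothing was flagged keeps an empty batch from being reported as a perfect score.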

2) Design the data and context strategy

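RAG (retrieval-augmented generation) is usually a better first investment than fine-tuning for enterprise cases, because it grounds answers in current, access-controlled content without retraining. Start with retrieval quality; hallucinations drop when the model sees the right passages at the right time.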

- Create data contracts and normalize content into chunks with provenance.

- Use hybrid search (BM25 + dense vectors) with freshness signals.

- Attach per-chunk access control tags; enforce at retrieval time.
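
The three bullets above can be combined in one retrieval step. The sketch below is a minimal illustration under stated assumptions: it fuses a BM25 ranking and a dense-vector ranking with reciprocal rank fusion (one common fusion method, chosen here for simplicity), applies an exponential freshness decay, and drops chunks whose access tags do not overlap the caller's. The `Chunk` fields, the 30-day half-life, and the "any shared tag grants access" rule are all hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class Chunk:
    chunk_id: str
    source_uri: str        # provenance: where the passage came from
    updated_at: datetime   # freshness signal
    acl_tags: frozenset    # access control tags attached at ingest


def rrf_fuse(rankings, k=60):
    """Reciprocal rank fusion: sum 1 / (k + rank) over each ranked list."""
    scores = {}
    for ranking in rankings:
        for rank, chunk_id in enumerate(ranking, start=1):
            scores[chunk_id] = scores.get(chunk_id, 0.0) + 1.0 / (k + rank)
    return scores


def retrieve(bm25_ids, dense_ids, chunks, user_tags, now,
             half_life_days=30.0, top_n=5):
    """Fuse both rankings, enforce ACLs, and favor fresher chunks."""
    results = []
    for chunk_id, score in rrf_fuse([bm25_ids, dense_ids]).items():
        chunk = chunks[chunk_id]
        # Enforce permissions at retrieval time (assumed rule: any shared tag).
        if not (chunk.acl_tags & user_tags):
            continue
        age_days = (now - chunk.updated_at).total_seconds() / 86400
        freshness = 0.5 ** (age_days / half_life_days)
        # Freshness scales the fused score between 50% and 100%.
        results.append((score * (0.5 + 0.5 * freshness), chunk))
    results.sort(key=lambda pair: pair[0], reverse=True)
    return [chunk for _, chunk in results[:top_n]]
```

Filtering before the prompt is assembled means a restricted passage never reaches the model, so no output-side check has to catch it.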

3) Choose an architecture that lets you move fast safely

Default to serverless architecture for elastic, event-driven loads. On AWS, pair API Gateway + Lambda + Step Functions + EventBridge; on GCP, Cloud Run + Pub/Sub + Workflows; on Azure, Functions + Event Grid. For high throughput, use Knative on Kubernetes with autoscaling.

In media and content platform engineering, stream assets through a moderation pipeline, then route to LLM services via a broker layer. Keep model calls stateless; persist session context in a fast store like Redis or DynamoDB.

- Gateway with request validation, rate limits, and schema checks.

- Policy engine (OPA or Cedar) for authorization and safety rules.

- Feature store and vector DB (Featureform, Feast, Pinecone, or pgvector).

- Async orchestrator (Step Functions, Temporal) for multi-step tool use.

- Observable cache (Fastly, CloudFront, or CDN KV) for hot prompts.
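
To make the "stateless calls, external session state" idea concrete, here is a minimal sketch of a request handler. It assumes a hypothetical session store interface (an in-memory class stands in for Redis or DynamoDB) and a `call_model` callable supplied by the caller; the field names and the 4,000-character limit are illustrative only.

```python
class InMemorySessionStore:
    """Stand-in for Redis or DynamoDB; the load/save interface is hypothetical."""

    def __init__(self):
        self._data = {}

    def load(self, session_id):
        return list(self._data.get(session_id, []))

    def save(self, session_id, turns):
        self._data[session_id] = list(turns)


REQUIRED_FIELDS = {"tenant_id": str, "session_id": str, "message": str}
MAX_MESSAGE_CHARS = 4000  # assumed limit for illustration


def validate(payload):
    """Gateway-style schema check; returns a list of error strings."""
    errors = []
    for name, expected in REQUIRED_FIELDS.items():
        if not isinstance(payload.get(name), expected):
            errors.append(f"{name} must be a {expected.__name__}")
    message = payload.get("message")
    if isinstance(message, str) and len(message) > MAX_MESSAGE_CHARS:
        errors.append("message is too long")
    return errors


def handle(payload, store, call_model):
    """Stateless handler: all conversation state lives in the store."""
    errors = validate(payload)
    if errors:
        return {"status": 400, "errors": errors}
    turns = store.load(payload["session_id"])
    turns.append({"role": "user", "content": payload["message"]})
    reply = call_model(turns)
    turns.append({"role": "assistant", "content": reply})
    store.save(payload["session_id"], turns)
    return {"status": 200, "reply": reply}
```

Because the handler keeps nothing in process memory, any function instance can serve any request, which is what lets the serverless platform scale it freely.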

4) Model routing: Claude, Gemini, and Grok

Route by capability and constraint. Claude excels at long-context reasoning and safe drafting, Gemini shines at multimodal and Google ecosystem grounding, and Grok is nimble for real-time streams. Build deterministic fallbacks and allow tenant-level overrides.
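
One way to express this routing is a small table plus an ordered fallback loop. The sketch below is illustrative: the task names, the default ordering, and the `clients` mapping of provider name to a plain callable are assumptions, and no real vendor SDK is used.

```python
from dataclasses import dataclass, field

# Assumed default preference order per task type; adjust to your own evals.
ROUTES = {
    "long_context": ["claude", "gemini", "grok"],
    "multimodal": ["gemini", "claude", "grok"],
    "realtime": ["grok", "gemini", "claude"],
}


@dataclass
class Router:
    clients: dict  # provider name -> callable(prompt) -> str (hypothetical wrappers)
    tenant_overrides: dict = field(default_factory=dict)  # (tenant_id, task) -> [names]

    def candidates(self, tenant_id, task):
        """Tenant override wins; otherwise use the default order for the task."""
        override = self.tenant_overrides.get((tenant_id, task))
        return override if override is not None else ROUTES[task]

    def complete(self, tenant_id, task, prompt):
        """Try providers in a fixed order so fallbacks are deterministic."""
        failures = []
        for name in self.candidates(tenant_id, task):
            try:
                return name, self.clients[name](prompt)
            except Exception as exc:  # a sketch; narrow this in real code
                failures.append(f"{name}: {exc}")
        raise RuntimeError("all providers failed: " + "; ".join(failures))
```

Returning the provider name alongside the text makes it easy to log which model actually served each request.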

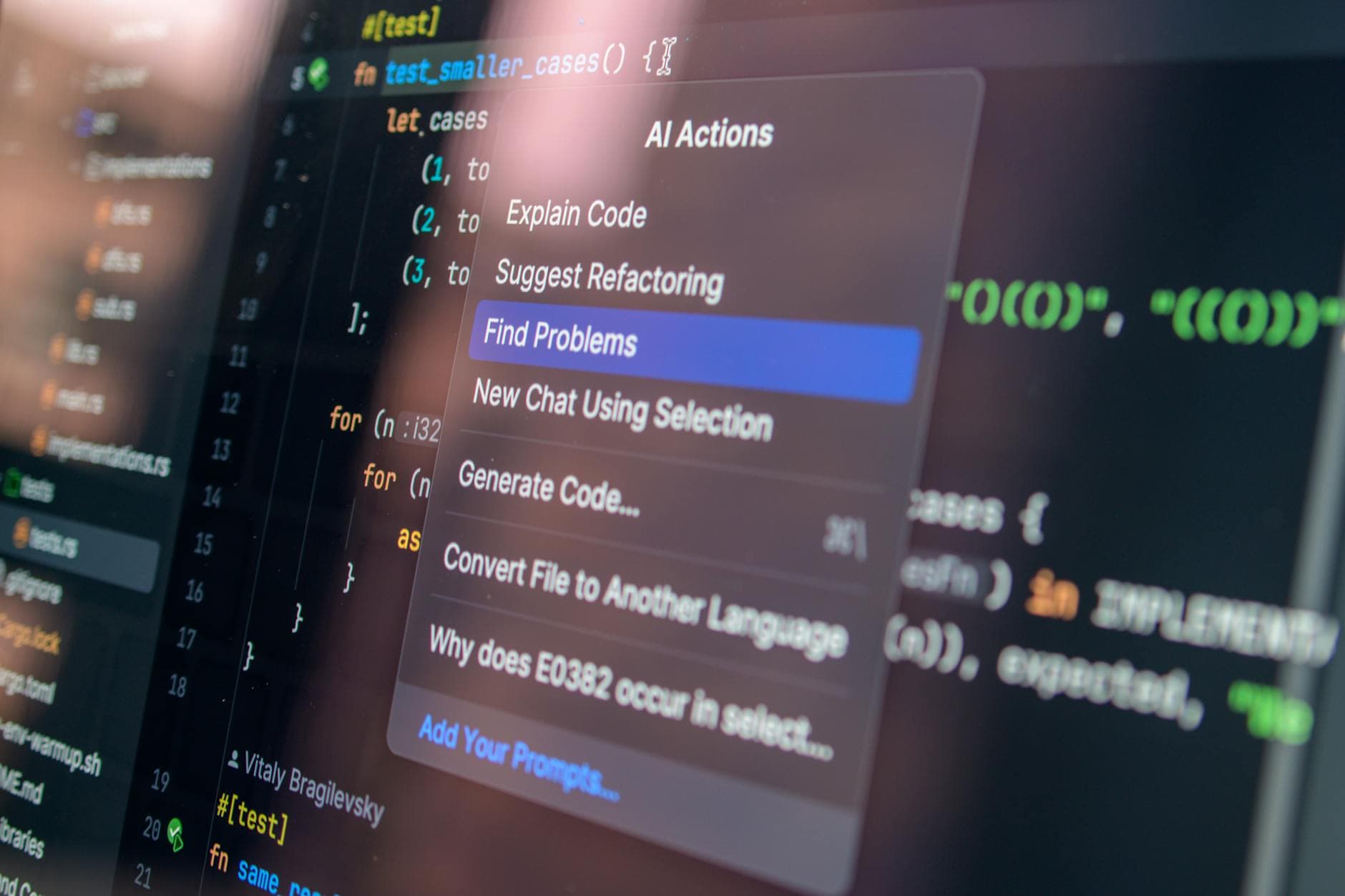

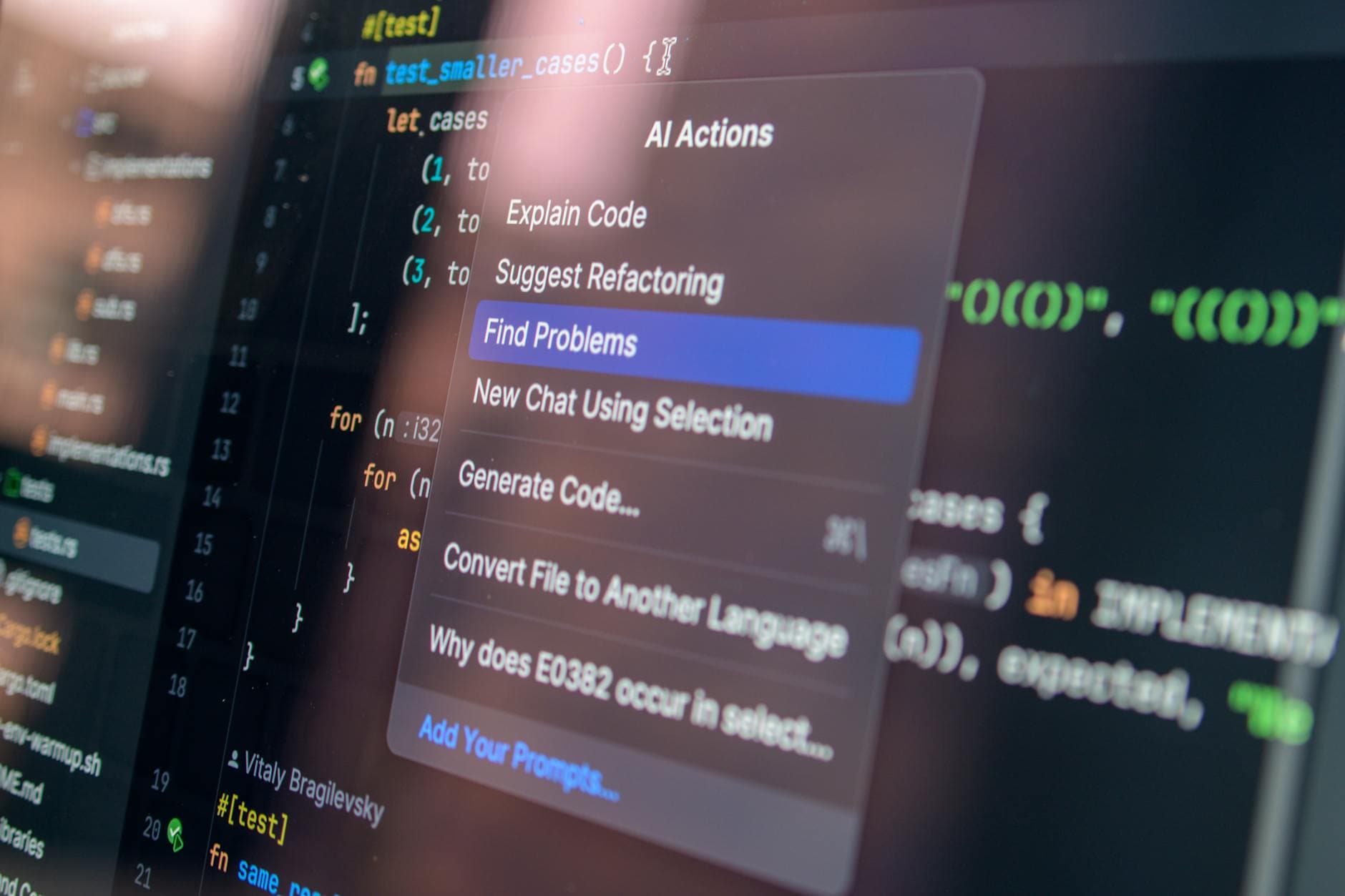

5) Treat prompts, tools, and policies as code

- Version prompts with tests; use JSON Schemas to force structure.

- Register tools with contracts; validate inputs at the edge.

- Use safety templates and moderation gates per region and audience.
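
As a rough illustration of treating a prompt as versioned, testable code, the sketch below pairs a template with a structural check on the model's reply. The prompt text, the version label, and the three-field brief format are hypothetical, and the hand-rolled type check only stands in for a full JSON Schema validator.

```python
import json
from string import Template

PROMPT_VERSION = "brief-v3"  # hypothetical version label, bumped on every prompt diff

BRIEF_PROMPT = Template(
    "Write an editorial brief about $topic for $audience. "
    'Reply with JSON only, using the keys "title", "keywords", and "outline".'
)

# Expected top-level keys and types (a simplified stand-in for a JSON Schema).
BRIEF_SCHEMA = {"title": str, "keywords": list, "outline": list}


def render(topic, audience):
    """Fill the template; substitute() raises KeyError if a field is missing."""
    return BRIEF_PROMPT.substitute(topic=topic, audience=audience)


def parse_brief(raw):
    """Parse the model reply and reject anything that breaks the structure."""
    data = json.loads(raw)  # raises a ValueError subclass on invalid JSON
    if not isinstance(data, dict):
        raise ValueError("reply must be a JSON object")
    for key, expected in BRIEF_SCHEMA.items():
        if not isinstance(data.get(key), expected):
            raise ValueError(f"{key} must be a {expected.__name__}")
    extra = set(data) - set(BRIEF_SCHEMA)
    if extra:
        raise ValueError(f"unexpected keys: {sorted(extra)}")
    return data
```

A unit test that calls `render` and `parse_brief` on recorded replies can then run in CI whenever the prompt changes.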

6) Evaluate relentlessly

Stand up offline suites with golden prompts, adversarial cases, and policy checks. In production, capture feedback signals, compare candidates via A/B or multi-armed bandits, and alert when quality drifts or latency budgets are breached.
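
An offline suite can start very small. The sketch below assumes each golden case lists strings the output must and must not contain, and that `generate` is whatever callable wraps the model under test; both are illustrative choices, and real suites often add graded or model-based scoring on top.

```python
import time
from dataclasses import dataclass, field


@dataclass
class GoldenCase:
    prompt: str
    must_contain: list = field(default_factory=list)
    must_not_contain: list = field(default_factory=list)  # policy checks


def run_suite(generate, cases, latency_budget_s=2.0):
    """Run every case; return (pass_rate, failures) for alerting or CI gates."""
    failures = []
    for case in cases:
        start = time.perf_counter()
        output = generate(case.prompt)
        elapsed = time.perf_counter() - start
        text = output.lower()
        missing = [s for s in case.must_contain if s.lower() not in text]
        leaked = [s for s in case.must_not_contain if s.lower() in text]
        too_slow = elapsed > latency_budget_s
        if missing or leaked or too_slow:
            failures.append({
                "prompt": case.prompt,
                "missing": missing,
                "leaked": leaked,
                "too_slow": too_slow,
            })
    pass_rate = 1 - len(failures) / len(cases) if cases else 0.0
    return pass_rate, failures
```

Comparing the pass rate between the current and candidate prompt or model gives a simple regression gate before any A/B traffic is exposed.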

7) Security, governance, and cost control

- PII redaction at ingress; encrypt context; rotate secrets.

- Per-tenant keys and quotas; granular RBAC across services.

- Token and time budgets per workflow; cache embeddings aggressively.

- Data residency via region pinning; full audit logs for regulators.
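
Two of the controls above are easy to prototype: redaction at ingress and a per-workflow token budget. The sketch below is deliberately simple and rests on assumptions: the two regular expressions catch only obvious emails and phone numbers (production systems typically use a dedicated PII detection service), and token counts are supplied by the caller rather than computed here.

```python
import re

# Illustrative patterns only; they will miss many real-world PII formats.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text):
    """Replace obvious emails and phone numbers before text reaches a model."""
    text = EMAIL.sub("[EMAIL]", text)
    return PHONE.sub("[PHONE]", text)


class BudgetExceeded(Exception):
    """Raised when a workflow would exceed its token budget."""


class TokenBudget:
    """Tracks token spend for one workflow run (hypothetical helper)."""

    def __init__(self, limit):
        self.limit = limit
        self.used = 0

    def charge(self, tokens):
        """Record spend, refusing any call that would cross the limit."""
        if self.used + tokens > self.limit:
            raise BudgetExceeded(
                f"{self.used + tokens} tokens requested, limit is {self.limit}"
            )
        self.used += tokens
        return self.limit - self.used  # remaining budget
```

Charging the budget before each model call, not after, stops a runaway multi-step workflow at the step that would overspend.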

8) Delivery, reviews, and operations

Automate IaC, CI/CD, and policy-as-code from day one. Institute code review and technical audit services focused on prompt diffs, data lineage, and model governance. For teams that need velocity, slashdev.io connects you with vetted remote engineers who have shipped LLM features in production-grade stacks.

9) Scenario playbook

- Editorial copilot: Claude drafts briefs using fresh retrieval; Gemini checks facts against Search; Grok streams social tone insights.

- Ad review: Event-driven moderation, policy engine gates, and tool-based appeals workflow with human-in-the-loop.

- Support triage: Summaries to CRM, next-best-action via tools, and multilingual responses with guardrails.

10) Migration path

- Weeks 0-2: Proof of value with one use case, thin RAG, and guarded prompts.

- Weeks 3-12: Production pilot with serverless pipelines, evals, and budget caps.

- Quarter 2+: Scale to two more domains; add model routing and enterprise SSO.

11) Pitfalls to avoid

- Monolithic prompts that hide business logic; prefer composable tools.

- Retrieval without access controls; enforce permissions at query time.

- Zero observability; ship tracing, metrics, and replay from day one.

- Chasing model hype; measure ROI per workflow weekly.

Closing guidance

Treat LLMs like any critical dependency: version them, test them, and abstract them. With disciplined serverless architecture, ruthless evaluation, and strong governance, your teams can add intelligence to applications without adding fragility. Start small, wire for change, and let results, not demos, drive the roadmap.

Executive checklist for launch readiness

- Defined KPI tree linked to revenue, cost, risk, and brand safety.

- Signed data contracts, retention policies, and SEO/marketing content governance.

- Cloud spend guardrails, rate limits, fallbacks, and disaster recovery rehearsed.

- Human review workflows calibrated; sampling rates tuned for campaign launches.

- Third-party audits scheduled; red teams exercise prompts, tools, and policies.

Ship, learn, iterate, and quickly scale what the numbers prove.